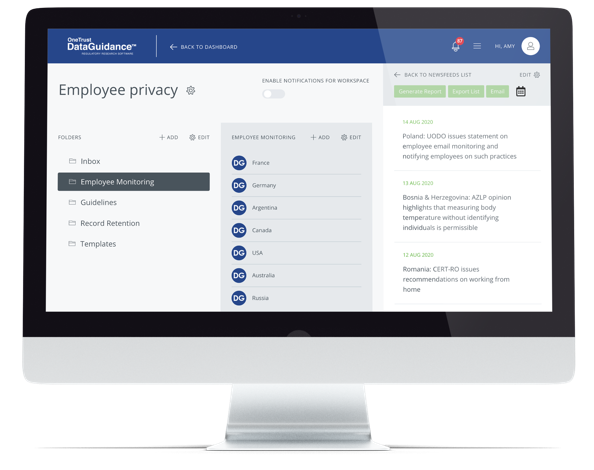

Continue reading on DataGuidance with:

Free Member

Limited ArticlesCreate an account to continue accessing select articles, resources, and guidance notes.

Already have an account? Log in

Canada: AI and Data Act - Key takeaways

On 16 June 2022, the Government of Canada introduced in the House of Commons the Artificial Intelligence and Data Act ('AIDA') as part of Bill C-27, An Act to enact the Consumer Privacy Protection Act, the Personal Information and Data Protection Tribunal Act and the Artificial Intelligence and Data Act and to make consequential and related amendments to other Acts, also known as the Digital Charter Implementation Act 2022 ('DCIA 2022').

In this Insight article, OneTrust DataGuidance Research provides an overview of the AIDA, which is currently undergoing second reading in the House of Commons, and the wider perceptions of artificial intelligence ('AI') at a federal level in Canada. For an analysis of the DCIA 2022, you may read our Insight article Canada: Digital Charter Implementation Act 2022 - What you need to know.

Background of the AIDA

The AIDA Companion Document, released by the Canadian Government, sets out the backdrop against which the AIDA was tabled. Notably, the AIDA Companion Document highlights that, although AI is becoming ubiquitous, there are still few clear standards, making it difficult for consumers to trust AI and for businesses to demonstrate that they are using it responsibly. In particular, the AIDA Companion Document notes some high-profile harmful or discriminatory incidents that have contributed to a loss of trust in AI, including AI tools used by large multinational companies to shortlist job candidates that discriminated against women, facial recognition systems that were biased against women and people of colour, and AI systems used to create 'deepfakes'.

Overall, the AIDA Companion Document recognises that the AIDA is one of the first national regulatory frameworks for AI to be proposed and is designed to protect individuals and communities from the adverse impacts associated with high-impact AI systems, and to support the responsible development and adoption of AI across Canada. In this, the AIDA follows the EU Proposal for a Regulation Laying Down Harmonised Rules on Artificial Intelligence (Artificial Intelligence Act) and amending certain legal acts ('the AI Act'), by taking a risk-based approach supported by industry standards developed over the coming years.

Scope

According to the AIDA Companion Document, the AIDA seeks to safeguard Canadians while also ensuring the development of responsible AI in Canada. Specifically, the AIDA aims to regulate international and interprovincial trade and commerce in AI systems by establishing common requirements, applicable across Canada, for the design, development, and use of those systems, and to prohibit certain conduct in relation to AI systems that may result in serious harm to individuals or harm to their interests.

Obligations

The AIDA lays down requirements applicable to 'regulated activities' in general, with more restrictive requirements targeting high-impact AI systems (please see below for more information).

With regard to the former, the AIDA defines 'regulated activity' as 'any of the following activities carried out in the course of international or interprovincial trade and commerce:

- processing or making available for use any data relating to human activities for the purpose of designing, developing or using an [AI] system;

- designing, developing or making available for use an [AI] system or managing its operations'.

Furthermore, the AIDA defines 'AI system' as 'a technological system that, autonomously or partly autonomously, processes data related to human activities through the use of a genetic algorithm, a neural network, machine learning or another technique in order to generate content or make decisions, recommendations or predictions'.

Notably, the AIDA stipulates that persons who carry out a regulated activity and who process or make available for use anonymised data in the course of that activity must establish measures with respect to the manner in which data is anonymised and the use or management of anonymised data.

More generally, the AIDA requires persons carrying out a regulated activity to keep records of any measures relating to anonymisation, mitigation, and compliance monitoring, alongside the reasons supporting their assessment of whether their system is a 'high-impact AI system'.

High-impact AI systems

Further to the above, specific obligations are triggered when an AI system is deemed to be 'high-impact'. Notably, the AIDA defines 'high-impact AI system' as an AI 'system that meets the criteria for a high-impact system that are established in regulations'. However, the AIDA Companion Document clarifies that the regulations defining which systems would be considered 'high-impact systems' will be developed in consultation with a broad range of stakeholders to ensure they are effective at protecting the interests of the Canadian public, while avoiding an undue burden on the Canadian AI ecosystem.

Nonetheless, the AIDA Companion Document clarifies that the Canadian Government considers the following factors to be useful in determining whether an AI system is high-impact:

- evidence of risks of harm to health and safety, or a risk of adverse impact on human rights, based on both the intended purpose and potential unintended consequences;

- the severity of potential harms;

- the scale of use;

- the nature of harms or adverse impacts that have already taken place;

- the extent to which, for practical or legal reasons, it is not reasonably possible to opt-out from that system;

- imbalances of economic or social circumstances, or age of impacted persons; and

- the degree to which the risks are adequately regulated under another law.

Further, the AIDA Companion Document provides examples of different systems and their potential impacts:

- Screening systems impacting access to services or employment - AI systems that make decisions, recommendations, or predictions relating to access to services, such as credit, or to employment. Such systems carry potential for discriminatory and economic harms.

- Biometric systems used for identification and inference - AI systems that use biometric data to make predictions, such as identifying a person remotely, or to make behavioural or psychological predictions. Such systems may have significant impacts on mental health.

- Systems influencing human behaviour at scale - AI-powered content recommendation systems that can influence human behaviour, expression, and emotion on a large scale. Such systems may have psychological and physical health impacts.

Further, the AIDA requires that persons responsible for a high-impact AI system must establish measures to identify, assess, and mitigate the risks of harm or biased output that could result from the use of the system. In this regard, the AIDA addresses two types of adverse effects associated with high-impact AI systems, namely 'harm' to individuals and 'biased outputs'.

With regard to the former, 'harm' is defined under the AIDA as '(a) physical or psychological harm to an individual; (b) damage to an individual's property; or (c) economic loss to an individual'. According to the AIDA Companion Document, the term is intended to encapsulate a broad range of adverse impacts that may arise across economic sectors, and harms may be experienced by individuals or across groups of individuals, which can increase the severity of the impact. For example, the AIDA Companion Document identifies children as a vulnerable group who may face a greater risk of harm from high-impact AI systems and who therefore require specific risk mitigation measures.

'Biased output', on the other hand, is defined in the AIDA as 'content that is generated, or a decision, recommendation or prediction that is made, by an AI system and that adversely differentiates, directly or indirectly and without justification, in relation to an individual on one or more of the prohibited grounds of discrimination set out in the Canadian Human Rights Act (1985), or on a combination of such prohibited grounds'. The AIDA further specifies that the term 'does not include content, or a decision, recommendation or prediction, the purpose and effect of which are to prevent disadvantages that are likely to be suffered by, or to eliminate or reduce disadvantages that are suffered by, any group of individuals when those disadvantages would be based on or related to the prohibited grounds'. For example, the AIDA Companion Document notes that individual income often correlates with prohibited grounds, such as race and gender, but that income is also relevant to credit decisions. Accordingly, the AIDA Companion Document notes that the challenge in such instances is to ensure that AI systems do not use prohibited grounds, such as race and gender, as proxy indicators of creditworthiness, exploiting the underlying correlation to produce unfair results for specific individuals.

Generally, the AIDA prescribes that persons responsible for high-impact AI systems must establish measures to monitor compliance with mitigation measures aimed at preventing harms and biased output.

Under the AIDA, persons who make high-impact AI systems available for use and persons who manage the operation of a high-impact AI system must publish, on a publicly available website, a plain-language description of the system that includes an explanation of:

- how the system is intended to be used;

- the types of content that it is intended to generate and the decisions, recommendations, or predictions that it is intended to make;

- the mitigation measures established; and

- any other information that may be prescribed by regulation.
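Because the published description must cover a fixed set of elements, an organisation may find it convenient to keep those elements in one structured record and generate the website text from it. The Python sketch below is a hypothetical illustration of that idea; the class, field names, and output wording are assumptions rather than anything the AIDA prescribes, and the field for other prescribed information is left open because those requirements are to be set by regulation.

```python
from dataclasses import dataclass, field


@dataclass
class PlainLanguageDescription:
    """Hypothetical record of the elements listed above for a high-impact system."""
    system_name: str
    intended_use: str                 # how the system is intended to be used
    intended_outputs: list[str]       # content, decisions, recommendations or predictions
    mitigation_measures: list[str]    # mitigation measures established
    other_prescribed_info: list[str] = field(default_factory=list)  # set by regulation

    def missing_elements(self) -> list[str]:
        """Names of the required elements that are still empty."""
        missing = []
        if not self.intended_use.strip():
            missing.append("intended use")
        if not self.intended_outputs:
            missing.append("intended outputs")
        if not self.mitigation_measures:
            missing.append("mitigation measures")
        return missing

    def render(self) -> str:
        """Plain-text version suitable for publishing on a website."""
        lines = [f"About {self.system_name}", "",
                 f"How it is intended to be used: {self.intended_use}", "",
                 "What it is intended to produce:"]
        lines += [f"- {item}" for item in self.intended_outputs]
        lines += ["", "How we mitigate risks of harm and biased output:"]
        lines += [f"- {item}" for item in self.mitigation_measures]
        if self.other_prescribed_info:
            lines += ["", "Other information:"]
            lines += [f"- {item}" for item in self.other_prescribed_info]
        return "\n".join(lines)
```

Checking `missing_elements()` before publication is one simple way to avoid releasing an incomplete description.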

Guide for compliance

The AIDA Companion Document anticipates that businesses would be required to establish suitable accountability mechanisms to guarantee adherence to their legal responsibilities as stipulated in the AIDA.

To this end, the AIDA Companion Document outlines the following principles to guide the implementation of obligations under the AIDA:

- Human oversight and monitoring - High-impact systems must be designed and developed in such a way as to enable people managing the operations of the system to exercise meaningful oversight, with a level of interpretability appropriate to the context.

- Transparency - Providing the public with appropriate information about how high-impact AI systems are being used, sufficient to allow the public to understand the systems' capabilities, limitations, and potential impacts.

- Fairness and equity - Building AI systems with an awareness of the potential for discriminatory outcomes, with appropriate actions taken to mitigate discriminatory outcomes.

- Safety - High-impact AI systems must be proactively assessed to identify harms that could result from the use of the system, including foreseeable misuse.

- Accountability - Organisations should put in place governance mechanisms to ensure compliance, including proactive documentation of policies, processes, and measures.

- Validity and robustness - Analysing whether high-impact AI systems perform consistently, and whether they are stable and resilient in a variety of circumstances.

Moreover, the AIDA Companion Document provides different measures that may apply at each stage of the AI lifecycle:

- system design:

  - performing an initial assessment of potential risks associated with the use of an AI system in the context and deciding whether the use of AI is appropriate;

  - assessing and addressing potential biases introduced by the dataset selection; and

  - assessing the level of interpretability needed and making design decisions accordingly;

- system development:

  - documenting datasets and models used;

  - performing evaluation and validation, including retraining as needed;

  - building in mechanisms for human oversight and monitoring; and

  - documenting appropriate use(s) and limitations;

- making a system available for use:

  - keeping documentation regarding how the requirements for design and development have been met;

  - providing appropriate documentation to users regarding datasets used, limitations, and appropriate uses; and

  - performing a risk assessment regarding the way the system has been made available; and

- managing the operations of a system:

  - logging and monitoring the output of the system as appropriate in the context;

  - ensuring adequate monitoring and human oversight; and

  - intervening as needed, based on operational parameters.
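The measures for managing the operations of a system (logging outputs, monitoring them, and intervening based on operational parameters) can be illustrated with a small monitor. This is a minimal Python sketch under stated assumptions: the two operational parameters (a minimum confidence score and a maximum share of adverse outcomes over a rolling window), their default values, the logger name, and all class and method names are hypothetical, since neither the AIDA nor the AIDA Companion Document specifies how monitoring should be implemented.

```python
import logging
from collections import deque

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("ai_output_monitor")  # hypothetical logger name


class OutputMonitor:
    """Logs each output and flags cases that breach illustrative operational
    parameters. Thresholds are assumptions, not regulatory values."""

    def __init__(self, min_confidence: float = 0.7,
                 max_adverse_rate: float = 0.4, window: int = 100):
        self.min_confidence = min_confidence
        self.max_adverse_rate = max_adverse_rate
        self.recent = deque(maxlen=window)   # rolling record of adverse outcomes
        self.review_queue: list[dict] = []   # cases awaiting human review

    def record(self, case_id: str, decision: str,
               confidence: float, adverse: bool) -> bool:
        """Log one output; return True if a human should intervene."""
        self.recent.append(adverse)
        log.info("case=%s decision=%s confidence=%.2f", case_id, decision, confidence)
        reasons = []
        if confidence < self.min_confidence:
            reasons.append("low confidence")
        rate = sum(self.recent) / len(self.recent)
        # Only judge the adverse rate once the rolling window is full.
        if len(self.recent) == self.recent.maxlen and rate > self.max_adverse_rate:
            reasons.append(f"adverse rate {rate:.0%} over last {len(self.recent)} outputs")
        if reasons:
            self.review_queue.append({"case_id": case_id, "reasons": reasons})
            log.warning("case=%s routed to human review: %s", case_id, "; ".join(reasons))
            return True
        return False
```

Routing flagged cases to a review queue, rather than blocking them automatically, is one way of keeping a person in the loop in line with the human oversight principle described above; an operator could instead choose to suspend the system when a threshold is breached.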

Enforcement

The AIDA Companion Document provides that, in the initial years after its entry into force, the focus of the AIDA would be on education, establishing guidelines, and helping businesses come into compliance through voluntary means, granting businesses a transition period to adjust to the new framework before enforcement actions are undertaken.

Specifically, the Minister of Innovation, Science, and Industry would be responsible for the administration and enforcement of all parts of the AIDA, except those parts that involve prosecutable offences. At the same time, the AIDA also provides for the designation of a senior official, known as the Artificial Intelligence and Data Commissioner ('AI and Data Commissioner'), whose role is to assist the Minister in the administration and enforcement of the AIDA.

Notably, under the AIDA, audits with respect to possible contraventions can be conducted by independent auditors, and the person audited must give the auditor all assistance that is reasonably required to conduct the audit, including by providing any records or other information specified by the auditor. The cost of the audit is payable by the person audited.

In terms of sanctions, the AIDA provides that, where an AI system could result in harm or biased output, or where a contravention may have occurred, the Minister may order:

- the production of records to demonstrate compliance; or

- an independent audit.

Where there is a risk of imminent harm, the AIDA Companion Document provides that action may be taken by the Minister to:

- order cessation of use of a system; or

- publicly disclose information regarding contraventions of the AIDA, or in order to prevent harm.

Moreover, persons found to have committed a violation under the AIDA are also liable to administrative monetary penalties, established by regulations.

Finally, the AIDA also provides for offences related to AI systems.

First, under Article 30(1) of the AIDA, any person who contravenes Articles 6 to 12 of the AIDA, or who obstructs, or provides false or misleading information to, the Minister, anyone acting on the Minister's behalf, or an independent auditor, is guilty of an offence and is liable:

- on conviction on indictment, to a fine:

  - of not more than the greater of CAD 10 million (approx. €6,783,750) and 3% of the person's gross global revenues in its financial year before the one in which the person is sentenced, in the case of a person who is not an individual; and

  - at the discretion of the court, in the case of an individual; or

- on summary conviction, to a fine:

  - of not more than the greater of CAD 5 million (approx. €3,392,430) and 2% of the person's gross global revenues in its financial year before the one in which the person is sentenced, in the case of a person who is not an individual; and

  - of not more than CAD 50,000 (approx. €33,910), in the case of an individual.
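The 'greater of' formula gives the fine ceiling a fixed floor: for a person that is not an individual with gross global revenues R in the relevant financial year, the maximum fine is max(fixed amount, percentage × R). The Python sketch below applies the figures summarised above; the function and table names are hypothetical, and it covers only the arithmetic of the ceilings, not how a court would set an actual fine within them.

```python
from typing import Optional

# Ceilings for a person that is not an individual:
# (fixed amount in CAD, percentage of gross global revenues).
CORPORATE_CAPS = {
    "indictment": (10_000_000, 3),
    "summary": (5_000_000, 2),
}
# Ceilings for an individual: None means the fine is at the court's discretion.
INDIVIDUAL_CAPS = {
    "indictment": None,
    "summary": 50_000,
}


def max_fine_cad(procedure: str, is_individual: bool,
                 prior_year_revenue_cad: float = 0) -> Optional[float]:
    """Return the maximum fine in CAD, or None where there is no fixed ceiling.
    `prior_year_revenue_cad` stands for the person's gross global revenues in
    the financial year before the one in which the person is sentenced."""
    if procedure not in CORPORATE_CAPS:
        raise ValueError("procedure must be 'indictment' or 'summary'")
    if is_individual:
        return INDIVIDUAL_CAPS[procedure]
    fixed, percent = CORPORATE_CAPS[procedure]
    # "The greater of": the fixed amount applies unless the revenue share is larger.
    return max(fixed, prior_year_revenue_cad * percent / 100)
```

For example, with gross global revenues of CAD 1 billion the sketch gives ceilings of CAD 30 million on indictment and CAD 20 million on summary conviction. The fixed amounts govern until revenues exceed roughly CAD 333 million (indictment) or CAD 250 million (summary conviction).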

Secondly, under Articles 38 to 40, the AIDA creates three new criminal offences, namely:

- knowingly possessing or using unlawfully obtained personal information to design, develop, use, or make available for use an AI system;

- making an AI system available for use, knowing or being reckless as to whether it is likely to cause serious harm or substantial damage to property, where its use actually causes such harm or damage; and

- making an AI system available for use with intent to defraud the public and to cause substantial economic loss to an individual, where its use actually causes that loss.

Specifically, under the criminal offences above, persons are liable:

- on conviction on indictment, to a fine of not more than the greater of CAD 25 million (approx. €16,965,000) and 5% of the person's gross global revenues, in the case of a person who is not an individual, or, in the case of an individual, to a fine at the discretion of the court or to a term of imprisonment of up to five years; or

- on summary conviction, to a fine of not more than the greater of CAD 20 million (approx. €13,572,800) and 4% of the person's gross global revenues, in the case of a person who is not an individual, or, in the case of an individual, to a fine of not more than CAD 100,000 (approx. €67,860) or to a term of imprisonment of up to two years.
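The criminal-offence ceilings follow the same 'greater of' pattern with higher figures and add imprisonment for individuals. The Python sketch below records the maximum exposure as summarised above; the names are hypothetical, `revenue_cad` stands for the person's gross global revenues, and the model does not capture how a court chooses between a fine and imprisonment.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Exposure:
    max_fine_cad: Optional[float]          # None = fine at the court's discretion
    max_imprisonment_years: Optional[int]  # None = not applicable (non-individuals)


def criminal_exposure(procedure: str, is_individual: bool,
                      revenue_cad: float = 0) -> Exposure:
    """Maximum exposure for the AIDA criminal offences, as summarised above."""
    if procedure == "indictment":
        fixed, percent, individual_fine, prison_years = 25_000_000, 5, None, 5
    elif procedure == "summary":
        fixed, percent, individual_fine, prison_years = 20_000_000, 4, 100_000, 2
    else:
        raise ValueError("procedure must be 'indictment' or 'summary'")
    if is_individual:
        # The text describes a fine or a term of imprisonment for individuals.
        return Exposure(individual_fine, prison_years)
    # "The greater of" the fixed amount and the revenue-based amount.
    return Exposure(max(fixed, revenue_cad * percent / 100), None)
```

Because CAD 25 million is 5% of CAD 500 million and CAD 20 million is 4% of the same figure, under both procedures the revenue-based amount takes over once gross global revenues exceed CAD 500 million.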

Criminal offences under the AIDA fall within the remit of law enforcement and the Public Prosecution Service of Canada.

Next steps

The AIDA Companion Document highlights that, following royal assent of the DCIA 2022, the Canadian Government aims to conduct a consultation with industry, academia, and civil society to inform the implementation of the AIDA and its regulations. More specifically, such consultation is expected to address:

- types of AI systems that should be considered as high-impact;

- types of standards and certifications that should be considered in ensuring that AI systems meet the expectations of Canadians;

- priorities in the development and enforcement of regulations, including the enforcement of monetary penalties;

- the work of the AI and Data Commissioner; and

- the establishment of an advisory committee.

Lastly, the AIDA Companion Document provides that, following the abovementioned consultation, the Canadian Government aims to pre-publish draft regulations and conduct another consultation for 60 days.

Harry Chambers Senior Privacy Analyst
