EU: Navigating the AI Act - key practical considerations for users and other AI actors

Understanding the obligations inherent under the EU Artificial Intelligence Act (the AI Act) is paramount for users and other actors navigating this dynamic landscape.

The AI Act predominantly imposes obligations on 'providers' (developers) rather than on 'users' (deployers) of high-risk artificial intelligence (AI) systems. While some of the risk posed by the systems listed in Annex III comes from how they are designed, significant risks stem from how they are used. This means that providers cannot comprehensively assess the full potential impact of a high-risk AI system during the conformity assessment, and therefore that users must also have obligations to uphold fundamental rights. The first part of this series on the AI Act explored what types of AI are covered and what obligations are applicable to each AI actor. The second part offered a brief explanation of why it is important for providers to comprehend and adhere to the provider obligations. In the third and final article of this series, Sean Musch and Michael Charles Borrelli, from AI & Partners, and Charles Kerrigan, from CMS UK, explore the significance of comprehending these provider obligations and place them in the broader context of the ever-evolving AI terrain.

How does the AI Act regulate 'users' of high-risk AI systems?

The AI Act predominantly imposes requirements on providers rather than on the users of high-risk AI. For the vast number of high-risk AI uses in Annex III, compliance with the AI Act's requirements (Articles 8-15) is self-assessed by the providers themselves, in accordance with Article 43(2).

The AI Act imposes minimal obligations on users of high-risk AI systems. Article 29 outlines the duties of users of high-risk AI: to use the system in accordance with the provider's 'instructions of use,' to ensure that input data is relevant, and to monitor the system. However, the user is not required to undertake any further measures to assess the potential impact on fundamental rights, equality, accessibility, public interest, or the environment, to consult with affected groups, or to take active steps to mitigate potential harms.

Why do users need obligations under the EU AI Act?

The following outlines why the AI Act needs obligations on users of high-risk AI systems:

Foresight of AI harms in the context of use as well as design

A noted criticism of the current AI Act approach is that it overlooks the complexity of AI systems and the importance of the context in which they are used when assessing the impact on fundamental rights, people, and society. This is especially true for 'standalone' AI systems defined under Article 6(2), which present a wider and more complex range of risks than products do. Accordingly, legislative approaches geared toward product safety will be insufficient to address these wider implications for fundamental rights. While the provider-led conformity assessment process may unearth the main technical shortcomings of the system, this process is fundamentally ill-suited to identifying the risks that arise in the context of deployment.

As an example, a facial authentication system may meet the technical requirements specified in the AI Act yet still, in the context of deployment (e.g., in a specific shopping center), violate fundamental rights, breach data protection and non-discrimination law, and enable disproportionate surveillance, creating chilling effects on the enjoyment of fundamental rights.

Moreover, the requirements on providers in the AI Act are highly technical in nature and are thus insufficient as a mechanism to prevent or mitigate risks to fundamental rights, structural harms, or economic or environmental shifts engendered by the introduction of AI systems in certain contexts. Such aspects are inherently better assessed by the users in light of the context of the deployment of the AI system.

Facilitating accountability of users of high-risk AI

While some of the risks posed by the systems listed in Annex III arise from how they are designed, significant risks originate from how, and the purpose for which, they are used. This means that providers cannot comprehensively assess the full contextual impact of a high-risk AI system during the conformity assessment, and therefore that users of high-risk AI must also be assigned obligations in the AI Act to uphold fundamental rights.

Countering the dominance of AI providers

Obligations on users of high-risk AI systems would also counter an over-focus on providers of AI systems as the main governance mechanism. The assumption underpinning the regulatory proposal, that AI providers can fix all potential issues related to the use of AI systems, largely reinforces the dominant role of large technology firms as AI providers and allows them to determine entirely the terms of public service provision. The AI Act assigns the responsibility to detect and mitigate risks to fundamental rights and other possible harms to these private actors, irrespective of whether or not they have the relevant expertise, resources, and vested interest to do so.

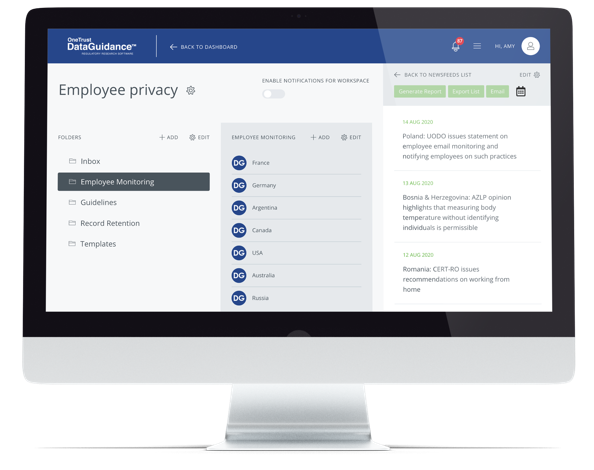

Data privacy legislation overlap

The obligations imposed on users of high-risk AI systems under the AI Act demonstrate a clear intersection with data privacy laws. The AI Act, while primarily focusing on providers in terms of compliance and conformity assessments, indirectly implicates users by incorporating certain provisions that align with and reinforce existing data privacy regulations within the EU.

One key aspect of this overlap lies in the duty of users, as outlined in Article 29 of the AI Act, to ensure that input data is relevant when using high-risk AI systems. This requirement inherently touches upon data privacy considerations, emphasizing the importance of handling and processing data in a manner consistent with established data protection principles. Users must adhere to the providers' instructions of use, which implies a responsibility to handle personal and sensitive data in accordance with applicable data protection laws, such as the General Data Protection Regulation (GDPR) in the EU.

Furthermore, the AI Act's emphasis on monitoring the system aligns with the broader scope of data privacy laws that mandate continuous oversight and protection of personal data. Users are expected to actively monitor the AI system's operations, which includes ensuring that data processing activities comply with privacy laws and that any potential risks to data subjects are identified and addressed promptly.

The link between the AI Act and data privacy laws becomes more pronounced when considering the potential harms associated with AI deployment, such as fundamental rights violations and breaches of data protection laws. The earlier example of a facial authentication system deployed in a specific context underscores the significance of assessing and mitigating risks related to privacy and non-discrimination laws, issues intricately tied to data protection.

Fines for non-compliance

The AI Act sets out a strict enforcement regime for non-compliance. There are three notional levels of non-compliance, each with significant financial penalties. Depending on the level of violation (in line with the risk-based approach), the AI Act applies the following penalties:

- Breach of AI Act prohibitions: Fines up to €35 million or 7% of total worldwide annual turnover (revenue), whichever is higher.

- Non-compliance with the obligations set out for providers of high-risk AI systems, authorized representatives, importers, distributors, users, or notified bodies: Fines up to €15 million or 3% of total worldwide annual turnover (revenue), whichever is higher.

- Supply of incorrect or misleading information to the notified bodies or national competent authorities in reply to a request: Fines up to €7.5 million or 1.5% of total worldwide annual turnover (revenue), whichever is higher.

In the case of small and medium-sized enterprises, the same fixed amounts and percentages apply, but the maximum fine is whichever of the two amounts is lower.
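The 'whichever is higher' rule, and its reversal for small and medium-sized enterprises, can be illustrated with a short calculation. The following is a minimal sketch using only the figures listed above; the names `PenaltyTier`, `TIERS`, and `maximum_fine` are hypothetical and chosen for illustration, and the result is the upper limit of a possible fine, not a prediction of what an authority would actually impose.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PenaltyTier:
    """One penalty level, as listed in the article (illustrative structure)."""
    description: str
    fixed_amount_eur: int      # fixed ceiling in euros
    turnover_basis_points: int  # share of worldwide annual turnover (100 bp = 1%)


# Figures taken from the three levels described above; the keys are hypothetical.
TIERS = {
    "prohibited_practice": PenaltyTier(
        "Breach of AI Act prohibitions", 35_000_000, 700),
    "operator_obligation": PenaltyTier(
        "Non-compliance with obligations of providers, users, and others",
        15_000_000, 300),
    "misleading_information": PenaltyTier(
        "Incorrect or misleading information to authorities", 7_500_000, 150),
}


def maximum_fine(tier_key: str, worldwide_turnover_eur: int,
                 is_sme: bool = False) -> int:
    """Return the fine ceiling in whole euros for a given tier and turnover.

    Non-SMEs: the higher of the fixed amount and the turnover share.
    SMEs: the lower of the two, as described in the article.
    """
    tier = TIERS[tier_key]
    # Integer arithmetic avoids floating-point rounding; fractions of a euro
    # are rounded down.
    turnover_based = worldwide_turnover_eur * tier.turnover_basis_points // 10_000
    pick = min if is_sme else max
    return pick(tier.fixed_amount_eur, turnover_based)


if __name__ == "__main__":
    # A large firm with EUR 1 billion turnover breaching a prohibition.
    print(maximum_fine("prohibited_practice", 1_000_000_000))
    # An SME user with EUR 10 million turnover breaching its obligations.
    print(maximum_fine("operator_obligation", 10_000_000, is_sme=True))
```

For a large organization the turnover-based figure quickly overtakes the fixed amount, while for an SME the turnover-based figure is usually the binding limit.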

Conclusion

The AI Act places a substantial burden on providers rather than users in the realm of high-risk AI systems. While users have minimal obligations outlined in Article 29, the convergence of the AI Act with data privacy laws and the strict enforcement regime highlights the crucial role users play in ensuring responsible AI deployment.

Practical considerations for users

Users of high-risk AI systems are tasked with following the providers' instructions of use, ensuring that input data is relevant, and monitoring system operations. In practice, this requires a deep understanding of the AI system's intricacies, capabilities, and potential societal implications. Users must actively collaborate with providers to establish effective usage strategies, particularly in the context of standalone AI systems that carry complex risks. Practical considerations extend to continuous monitoring, which demands an ongoing commitment to overseeing the system's operations and data processing activities.

Challenges in meeting obligations

Challenges for users stem from the complexity of AI systems, particularly standalone ones, whose risks may elude the technical focus of the conformity assessment process. Users may struggle to foresee potential harm in specific deployment contexts, such as the example of a facial authentication system in a shopping center. The AI Act's technical requirements may be insufficient to address wider implications for fundamental rights, requiring users to assess risks in the context of deployment. Bridging the gap between technical specifications and real-world risks poses a significant challenge for users.

Recap and importance of compliance

The recap emphasizes the fundamental reasons for users to meet their obligations under the AI Act. Users' obligations are not just legal requirements; they are essential for safeguarding fundamental rights, mitigating potential harms, and ensuring responsible AI deployment. The facial authentication system example underscores that compliance is crucial for preventing fundamental rights violations, data protection breaches, and disproportionate surveillance. Compliance is a moral and ethical responsibility that goes beyond the legal framework, contributing to the establishment of trust in AI technologies and their positive impact on society.

Call to action for users

The call to action for users is two-fold. Firstly, users must actively engage in understanding the broader implications of AI deployment, especially in specific contexts, and not merely rely on providers' technical assessments. Users should proactively collaborate with providers, assess risks in deployment contexts, and consider the societal impact of AI systems. Secondly, users need to recognize that their obligations under the EU AI Act are integral to countering the dominance of AI providers and ensuring a more balanced governance mechanism. By actively participating in ethical AI use, users can contribute to a responsible and inclusive AI landscape.

Sean Musch Co-CEO/CFO

Michael Charles Borrelli Co-CEO/COO

AI & Partners, Amsterdam

Charles Kerrigan Partner

CMS UK, London