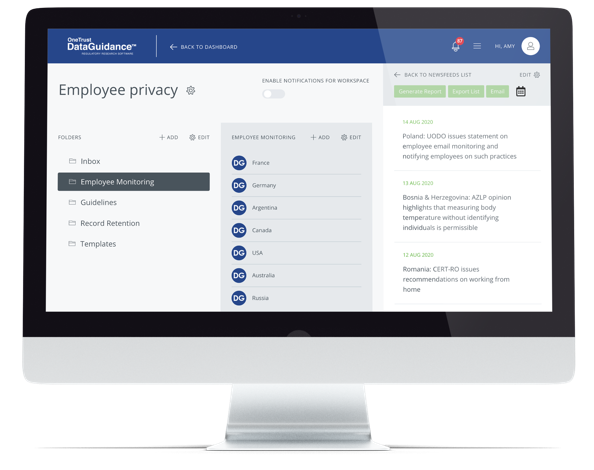

Continue reading on DataGuidance with:

Free Member

Limited ArticlesCreate an account to continue accessing select articles, resources, and guidance notes.

Already have an account? Log in

International: AI, privacy, and security - part two: Navigating legal, technical, and ethical challenges in healthcare AI

From enhanced diagnostic precision to improved treatment efficiency, from new drug discovery to appointment scheduling, artificial intelligence (AI) is revolutionizing healthcare as we know it. As is regularly the case with disruptive technologies, however, there are significant risks which may arise from the use of AI in healthcare. In part one of this insight series, Dr. Paolo Balboni, Noriswadi Ismail, Davide Baldini, and Kate Francis, of ICT Legal Consulting, delved into the growing influence of AI in areas such as recruitment, talent management, and cybersecurity. In part two, they outline potential concerns which may arise from the use of AI in the provision of health services. These concerns are legal, technical, and ethical and must necessarily be duly considered by developers and deployers of AI systems for the benefits of AI in healthcare to be reaped by society while mitigating to the extent possible relevant high-stakes risks that may arise.

Selected use cases of AI and healthcare

Data re-use

To be truly transformative, accurate, and sophisticated, AI systems require vast amounts of quality data. The reuse of high-quality training sets has the potential to generate cost and time savings and may allow for better precision and reliability. In healthcare, for example, the reuse of data permits the analysis of rare medical conditions for which data may be limited. The reuse of datasets is increasingly desirable in situations where problems related to accessibility and real-time access to data persist. The reuse of data also permits time and cost savings for organizations, opening further possibilities for innovation and scientific advancement for the betterment of society.

There are, however, challenges associated with reusing health-related data in AI applications. For example, compliance with the General Data Protection Regulation (GDPR) is essential, as it governs the processing of health data. This includes adherence to the principles of lawfulness, fairness, transparency, purpose limitation, data minimization, accuracy, storage limitation, and integrity and confidentiality, as well as to the rules on automated decision-making.

Health data, biometric data, and genetic data are classified as 'special' under the GDPR, entailing enhanced protections due to their potential to impact individuals' rights and freedoms negatively. Relevant risks of processing such data include, inter alia, discrimination, identity theft, fraud, and emotional detriment and suffering. While the GDPR generally prohibits the processing of special category data under Article 9(1), Article 9(2) provides for exceptions that may be relied upon to process such data. These include explicit consent (Article 9(2)(a) of the GDPR) and processing for archiving purposes in the public interest, scientific or historical research purposes, or statistical purposes in accordance with Article 89(1) of the GDPR (Article 9(2)(j) of the GDPR), explored further in the next section.

It is not always possible to rely on the explicit consent of data subjects under Article 9(2)(a) of the GDPR for secondary use of data, where informed consent presents difficulties. Explicit consent, which can be withdrawn by the data subject, raises legal and ethical considerations relating to personal data protection, individual autonomy, and even property rights. To collect the explicit consent of patients, clear and transparent information must be provided to allow them to understand the ramifications of sharing their health data.

Individuals may not be able to fully comprehend the multitude of ways in which their personal data may be used, who it may be shared with, and how such sharing and processing may impact them. Informed consent procedures may also be costly and time-consuming, or it may simply prove impossible to contact the individuals to request their consent.

Medical patients represent a notoriously vulnerable population. A difficult health situation limits their ability to make fully informed and autonomous decisions, and this is further exacerbated by the imbalance of power between the patient and the medical professional. The European Data Protection Board (EDPB) in its Opinion 3/2019 clarifies that consent should not be relied upon when there is a clear power imbalance in the context of clinical trials research, and that, if it is relied upon, its 'appropriateness' must be thoroughly assessed. In cases where consent was originally relied upon, and a data controller wishes to 'repurpose' the personal data relying on another legal basis, there remains legal uncertainty pending the EDPB's Guidelines on the processing of personal data for scientific research purposes.

The reuse of personal data may entail processing it for purposes that were not known or determined when such data was collected, which challenges the principle of purpose limitation. While the GDPR's principle of purpose limitation under Article 5(1)(b) calls for personal data to not be further processed in ways that are incompatible with the original purpose of collection, it allows for further processing for archiving purposes in the public interest, scientific or historical research purposes or statistical purposes under Article 89(1) of the GDPR. It must therefore be noted that despite the many rules that must be taken into consideration on a case-by-case basis, training AI with health data, even if collected for another purpose, is not inherently incompatible with the provisions of the GDPR.

The French Data Protection Authority (CNIL) has affirmed that datasets - and in particular those that are publicly accessible - may be reused for the purpose of training AI where it has been verified that the data was collected in a lawful manner and the re-use purpose is compatible with the original purpose of collection. The GDPR, in fact, aims to protect fundamental rights. As recital 4 GDPR recalls, data processing is intended to 'serve mankind.' Coherently, scientific advancements facilitated by data processing would fulfill such an objective. However, it must be recalled that when an organization relies on presumed compatibility under Article 5(1)(b) of the GDPR to further process data for research purposes in diverse projects, compatibility may only be presumed when adequate safeguards under Article 89(1) are implemented. It is, therefore, necessary to carefully evaluate and ensure the successful implementation of measures that are suited to protect the fundamental rights and freedoms of those whose data are being processed from undue interference. These measures, which may be technical and organizational, include but are not limited to pseudonymization, anonymization, and data minimization.
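As an illustration of two of the technical measures named above, the following is a minimal sketch of pseudonymization combined with data minimization. It is not a compliance recipe: the field names (`patient_id`, `age_band`, `diagnosis_code`), the function name, and the choice of a keyed hash (HMAC-SHA256) are assumptions made for the example and do not come from the GDPR or from any authority's guidance. Pseudonymized data remains personal data under the GDPR, and the secret key would need to be stored separately from the research dataset.

```python
import hashlib
import hmac

# Hypothetical allowlist of fields the research project actually needs
# (data minimization); a real schema will differ.
RESEARCH_FIELDS = {"age_band", "diagnosis_code"}


def pseudonymize_record(record: dict, secret_key: bytes) -> dict:
    """Return a minimized copy of `record` keyed by a pseudonym.

    The pseudonym is a keyed hash of the (hypothetical) `patient_id` field,
    so it cannot be recomputed from the identifier without the key.
    """
    pseudonym = hmac.new(
        secret_key,
        str(record["patient_id"]).encode("utf-8"),
        hashlib.sha256,
    ).hexdigest()
    # Keep only allowlisted fields; direct identifiers are dropped.
    minimized = {k: v for k, v in record.items() if k in RESEARCH_FIELDS}
    minimized["pseudonym"] = pseudonym
    return minimized
```

An allowlist is used rather than a list of fields to remove, so that any attribute added to the source records later is excluded by default.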

In order to increase the availability of health data, the European Health Data Space (EHDS), launched by the European Commission on May 3, 2022, aims to establish a system of common rules for primary and secondary uses of health data. It creates infrastructure, standards, and practices that are intended both to empower individuals by way of a single market for health record systems, medical devices, and high-risk AI systems, and to foster innovation, efficiency, research, and policy-making through the secondary use of health data, potentially leading to new treatments, medical devices, and better care. With the creation of the EHDS, Europe has established a solid legal framework for the use of health data, building on the GDPR, the Data Governance Act, the Data Act, and the Directive on Security of Network and Information Systems.

Research and development

EU law and the GDPR specifically aim to facilitate research and societal progress while protecting the fundamental rights and freedoms of individuals. The GDPR contains a special regime for data processing in the context of scientific research, clarified by the European Data Protection Supervisor (EDPS) in 'A Preliminary Opinion on data protection and scientific research' of January 2020 as applying when each of the following three criteria is met:

- personal data is processed;

- sectoral standards and ethics apply (think informed consent, oversight, etc.); and

- the research is carried out to improve societal well-being and collective knowledge.

Recital 159 of the GDPR permits a broad interpretation of the processing of personal data for scientific research purposes which includes technological development and demonstration as well as various types of research, including about AI. Nevertheless, the type of personal data involved, the risks of its processing, the type of research being carried out, applicable ethical standards, and the safeguards put in place to protect individuals must always be evaluated on a case-by-case basis by the data controller.

To process health data, the organization must have a legal basis under Article 6 of the GDPR and a separate exemption under Article 9 of the GDPR. As suggested in the previous section, health data may be processed for scientific research purposes for either primary or secondary use. Data collected specifically for a clinical trial would constitute primary use. Processing that is carried out on the clinical trial data and reused, e.g., to train an AI, would be considered secondary use. This distinction is relevant to determine the legal basis to be relied upon, transparency requirements, and compliance with the principle of purpose limitation in the GDPR, among others.

To carry out data processing activities in compliance with EU data protection rules, it is first necessary that the most appropriate legal basis for carrying out processing is identified. For research and development, appropriate legal bases may include consent or scientific research purposes. Legal bases other than consent may be relied upon when appropriate safeguards are applied (i.e., those required under Article 89(1) of the GDPR) and the processing of personal data, including special category data, complies with the principles of data minimization, lawfulness, fairness, and transparency.

The exemption provided under Article 9(2)(j) of the GDPR - commonly known as the 'research exemption' - permits special categories of data to be processed for such purposes in accordance with Article 89(1) of the GDPR based on EU or Member State Law. Unfortunately, due to differences in Member State laws, it is not always possible to have a harmonized approach across the EU when it comes to the question of processing personal data for research purposes. Moreover, organizations must be able to justify and demonstrate the scientific nature of the research and the fact that it is in the interest of the public. Article 89(1) of the GDPR furthermore requires that safeguards are implemented to protect the rights and freedoms of data subjects and to ensure that the principle of data minimization is complied with. Such safeguards include technical and organizational measures and may include pseudonymization and anonymization.

Organizations should recall that the EDPB in its 'Document on response to the request from the European Commission for clarifications on the consistent application of the GDPR, focusing on health research' adopted on February 2, 2021, clarifies that when a legal basis other than consent is relied upon, and coherently another exemption in Article 9(2) of the GDPR is relied upon, it is still necessary to comply with the 'ethical' requirement of requesting informed consent to participate in the medical research. According to the EDPB, such an action may also be seen as an additional safeguard under Article 9(2) of the GDPR.

The Article 29 Working Party (WP29) Guidelines on Consent furthermore clarify that when consent is relied upon to conduct research, such consent should be separate from consent requirements derived from other relevant ethical standards or procedural obligations, also noting that the GDPR does not require consent to be used as a legal basis for research-related data processing. Furthermore, when consent is relied upon, its conditions must be carefully evaluated for its specificity and transparency.

Compliance with the principle of transparency entails making individuals aware of risks presented by the processing of their personal data. As suggested above, transparency is also essential for any consent requested from the data subject to be specific and to inform them of how such consent can be withdrawn. Information should be tailored to the patient or research participant to allow them to genuinely comprehend the processing their data will be subject to and how their rights and freedoms may be affected.

The EU data protection authorities (DPAs) have taken a strict approach to the interpretation of transparency requirements under the GDPR in the context of research. Articles 13 and 14 of the GDPR and their exemptions should also be interpreted restrictively by data controllers.

Before further processing personal data for secondary purposes, such as for research and development where, e.g., the data were originally collected for the provision of healthcare services, information should be provided to the data subjects. There is, however, an exception to the obligation to provide information to data subjects under Article 14(5)(b) of the GDPR for scientific research purposes when data were not collected directly from the data subjects. It is noteworthy that the EDPB advocates for 'consider[ing] the implementation of more dynamic ways of informing data subjects of the (further) processing of their data' so as to avoid relying on the aforementioned exception.

It should be emphasized that no similar exception is provided under Article 13 of the GDPR, where personal data were collected directly from data subjects. It logically follows that in cases where the data controller intends to further process personal data for research purposes, the controller should ensure that appropriate measures are taken during data collection to meet its information obligations in relation to further processing.

In cases where it is not feasible to specify research purposes, it is incumbent on the controller to take further actions that permit consent to be valid through the implementation of relevant safeguards under Article 89(1) of the GDPR. The EDPB has further specified that it is necessary to narrow down the research purposes of processing health data to the extent possible, stating that 'broad consent' may not be relied upon to process such special category data for unknown future research. Broad consent may, however, be relied upon in cases where a research project falls within the scope of the broad consent provided, subject to additional safeguards that are to be identified by the EDPB in its forthcoming Guidelines on processing personal data for scientific research purposes.

Before commencing research and development using health data to develop AI systems, organizations should carefully consider their obligations under Article 35 of the GDPR and assess the likelihood of risks to the rights of data subjects. The WP29 Guidelines on Data Protection Impact Assessment (DPIA) provide guidance on types of processing that are 'likely to result in a high risk' and furnish relevant criteria to this end. Organizations should also consider the relevant whitelists and blacklists provided by EU DPAs.

Of relevance, the Italian DPA (Garante) released the 'Decalogue for the provision of national health services through artificial intelligence systems' in September 2023. It emphasizes the implementation of data protection principles and safeguards, as outlined in Article 25 of the GDPR, in the design of AI systems for healthcare.

Care should also be taken to ensure that data used to train systems is of high quality, up-to-date, and accurate under the principle of accuracy laid down in Article 5(1)(d) of the GDPR. This is highly relevant to avoid undue risks resulting from their processing and the systems they are used to train.

Dr. Paolo Balboni Founding Partner

Noriswadi Ismail Of Consultant & Advisor

Davide Baldini Partner

Kate Francis Privacy & Ethics Researcher, Development & Communication Specialist

ICT Legal Consulting