New York: Overview of the automated employment decision tools law

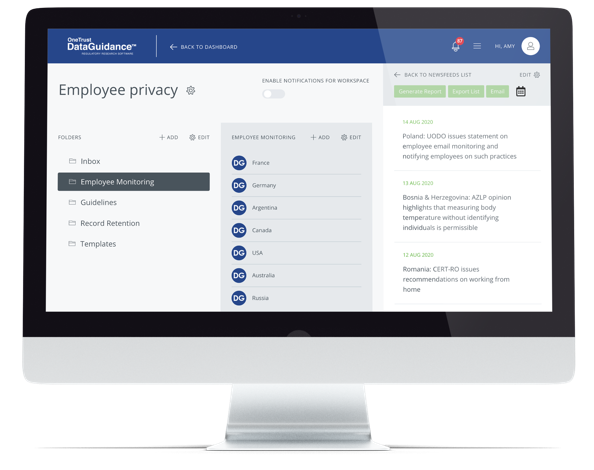

The New York City Council approved, on 10 November 2021, Bill Int 1894-2020 for a Local Law to amend the Administrative Code of the City of New York in relation to automated employment decision tools. The bill was then automatically enacted without a mayoral signature on 10 December 2021 and is due to take effect in 2023. In particular, the law regulates automated employment decision tools that produce a score, classification, or recommendation used to substantially assist or replace discretionary decision-making in employment decisions that affect individuals. OneTrust DataGuidance gives an overview of the law, its scope, and main provisions, alongside comments provided by Jessica Lee and Bianca Lewis from Loeb & Loeb LLP.

Scope and main provisions

This law applies to employers and employment agencies in New York City ('NYC') that use algorithms and automated decision tools to make 'employment decisions' affecting employment candidates. In addition, to comply with the provisions of the law, employers and employment agencies would need to have a bias audit conducted on any algorithms and automated decision tools that they use.

In this respect, Lee and Lewis provided interesting insights and explained some of the main provisions of the law, noting that they could be summarised as follows:

- "Automated employment decision tools: The law makes it unlawful for any employer or employment agency in NYC to use automated employment decision tools to screen a candidate or employee residing in NYC for an employment decision unless: a bias audit has been conducted on such tools no more than one year prior to the use of such tools; and a summary of the results of the most recent bias audit of such tools has been published on the employer's or the employment agency's website.

- Bias audit requirement: The bias audit is an impartial evaluation conducted by an independent auditor. It must include, but not be limited to, testing of the automated employment decision tools' disparate impact on federally protected classes of individuals on the basis of race, ethnicity, and binary gender.

- Notice requirements: An NYC employer or employment agency using an automated employment decision tool to screen candidates for employment or employees for promotion must provide a notice to each such individual residing in NYC at least ten business days prior to using such tools, which must include the following information:

  - that an automated employment decision tool will be used to evaluate employees or candidates and that such candidate or employee may request an alternative selection process or accommodation;

  - the types of job qualifications and characteristics that such tool will use in order to evaluate such candidate or employee; and

  - if not provided on the employer's or the employment agency's website, a statement of information regarding the type of data collected by the tool, the source of the data collected, and the employer's or employment agency's data retention policy, which may be requested in writing. Note that an employer or employment agency must reply within 30 days of a written request for such information.

- Penalties: Any employer or employment agency that violates the provisions of the law may be subject to civil penalties of not more than $500 for the first violation and each violation occurring on the same day as the first violation, and between $500 and $1,500 for each subsequent violation."
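
The penalty tiers quoted above can be turned into a rough exposure estimate. The following is a minimal sketch under stated assumptions: the function name `penalty_range` and the sample dates are hypothetical, and the sketch assumes each list entry counts as one violation, which the summary above does not define.

```python
from datetime import date

FIRST_DAY_MAX = 500    # cap per violation on the day of the first violation
SUBSEQUENT_MIN = 500   # floor per later violation
SUBSEQUENT_MAX = 1500  # cap per later violation


def penalty_range(violation_dates):
    """Return (minimum, maximum) total civil penalty in dollars.

    Assumption: one list entry equals one violation.
    """
    if not violation_dates:
        return (0, 0)
    first_day = min(violation_dates)
    # Violations on the first day share the lower tier (no stated floor).
    same_day = sum(1 for d in violation_dates if d == first_day)
    later = len(violation_dates) - same_day
    minimum = later * SUBSEQUENT_MIN
    maximum = same_day * FIRST_DAY_MAX + later * SUBSEQUENT_MAX
    return (minimum, maximum)


# Hypothetical example: two violations on the first day, two on later days.
dates = [date(2023, 3, 1), date(2023, 3, 1), date(2023, 3, 2), date(2023, 3, 5)]
print(penalty_range(dates))  # (1000, 4000)
```

The lower bound is driven only by the later violations, because the first-day tier has a cap but no stated minimum.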

The automated employment decision tools

The law prohibits the use of 'automated employment decision tools' without a prior bias audit. However, this expression could fit many of the software and computational processes used daily in the corporate and office world, and could therefore be interpreted narrowly or widely depending on the circumstances. To clarify what falls under the definition, Lee and Lewis detailed that, "'Automated employment decision tools' include any computational process, whether derived from machine learning, data analytics, artificial intelligence, or statistical modelling, that gives a simplified output, like a score, recommendation, or classification, that will be used by an employer or employment agency to substantially assist or replace discretionary decision-making when making employment decisions that affect individuals".

With respect to what falls outside of the scope of the law, Lee and Lewis noted that those include "[…] decision-making processes that do not impact individuals, like a junk email filter, firewall, anti-virus software, or database. Tools that scan resumes, or scan facial expressions in connection with a hiring or promotion decision are some examples of the tools in scope for this [law]".

Company obligations to ensure compliance

This law will take effect on 1 January 2023, and companies will have to prepare in advance to ensure that they are compliant by that date. In fact, as Lee and Lewis highlighted, "One of the most important tasks that employers and employment agencies will have to complete prior to the [law] going into effect is to conduct bias audits on their automated employment decision tools currently being used or that will be used on and after 1 January 2023".

Besides this, there are several other measures that the covered entities should implement. In particular, Lee and Lewis noted that employers and employment agencies in NYC "should also ensure from an operational standpoint that each such company (a) posts a summary of the results from the most recent bias audit conducted on such tools on their website; (b) has a mechanism for supplying notices to employees and candidates with information required under the [law]; and (c) has a mechanism to respond to written requests for information about the type of data collected by the automated employment decision tool, the source of the data collected, and the employer's or employment agency's data retention policies, within 30 days, if such information is not posted to their respective company website".
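
The three operational items (a) to (c) can be tracked with a small record per tool. This is only an illustrative sketch: the class `ToolCompliance`, its field names, and the helper `response_due` are hypothetical, and it assumes the 30 days are calendar days, which the comments above do not specify.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List

RESPONSE_WINDOW_DAYS = 30  # reply window for written requests (assumed calendar days)


@dataclass
class ToolCompliance:
    """Hypothetical per-tool record of the operational items (a) to (c)."""
    audit_summary_posted: bool    # (a) summary of latest bias audit on website
    notice_mechanism_ready: bool  # (b) notices to candidates and employees
    data_info_on_website: bool    # data type, source, retention policy published
    request_process_ready: bool   # (c) process for answering written requests

    def gaps(self) -> List[str]:
        """List the operational items that are still missing."""
        missing = []
        if not self.audit_summary_posted:
            missing.append("post bias audit summary")
        if not self.notice_mechanism_ready:
            missing.append("set up candidate/employee notices")
        # (c) only applies when the data information is not on the website.
        if not self.data_info_on_website and not self.request_process_ready:
            missing.append("set up written-request responses")
        return missing


def response_due(request_received: date) -> date:
    """Latest reply date for a written request received on the given day."""
    return request_received + timedelta(days=RESPONSE_WINDOW_DAYS)


status = ToolCompliance(True, False, False, False)
print(status.gaps())
print(response_due(date(2023, 1, 16)))  # 2023-02-15
```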

Nature and scope of the bias audit

One of the main criticisms that this law has received concerns the nature and scope of the bias audit. In particular, the audit, as required by the law, is specifically aimed at revealing race, ethnicity, and gender biases, thus leaving out other possible grounds of discrimination such as age or disability.

Nonetheless, Lee and Lewis highlighted that, "It is important to remember that the [law] specifies certain minimum requirements for the bias audit. The language of the [law] provides that the bias audit must include, but not be limited to, testing of the tools' disparate impact on protected classes of individuals with respect to race, ethnicity, and binary gender. Employers and employment agencies in NYC are still subject to federal, state, and local laws and regulations that prevent them from discriminating against protected classes of individuals on the basis of other factors, like age, religion, and disabilities, when making employment decisions, and should consider factoring those categories into the bias audit".
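
The comments above do not say how disparate impact should be measured. One commonly used measure, shown here purely as an assumption, is the impact ratio: each group's selection rate divided by the highest group's selection rate, often read against the four-fifths rule of thumb from US federal employee selection guidelines. The function names, group labels, and figures below are hypothetical.

```python
from typing import Dict, List, Tuple

FOUR_FIFTHS = 0.8  # rule-of-thumb threshold; an assumption, not taken from the law


def impact_ratios(outcomes: Dict[str, Tuple[int, int]]) -> Dict[str, float]:
    """Map each group to its selection rate divided by the highest group's rate.

    outcomes maps a group label to (number selected, number assessed).
    """
    rates = {g: sel / total for g, (sel, total) in outcomes.items() if total > 0}
    if not rates or max(rates.values()) == 0:
        raise ValueError("need at least one group with a non-zero selection rate")
    top = max(rates.values())
    return {g: rate / top for g, rate in rates.items()}


def flagged_groups(outcomes: Dict[str, Tuple[int, int]],
                   threshold: float = FOUR_FIFTHS) -> List[str]:
    """Return the groups whose impact ratio falls below the threshold."""
    return sorted(g for g, r in impact_ratios(outcomes).items() if r < threshold)


# Hypothetical screening results: (selected, assessed) per group.
sample = {"group_a": (50, 100), "group_b": (30, 100), "group_c": (45, 100)}
print(flagged_groups(sample))  # ['group_b']
```

The same calculation can be run on additional categories, such as age bands, if an employer chooses to extend the audit beyond the minimum categories.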

Conclusion

This law could play a major role in the artificial intelligence ('AI') framework in the job market, and represents a strong stance by the NYC Council on addressing the risk of algorithmic bias in this sector. Moreover, it could be a significant push towards algorithmic transparency, as suggested by its disclosure requirements, which inform individuals that they are being evaluated by a computer and how their data is handled. In this context, Lee and Lewis stated that, "this is a step in the right direction towards making the employment and promotional process more transparent for employees and candidates".

At the same time, the law could be seen to have some weak points, in particular that the bias audit may fall on the vendors of the tools, who would have to show compliance with a set of requirements that may not be as strict as they should be. As Lee and Lewis consider, "[We] do have a concern regarding the effectiveness of the bias audit. There is a possibility that most companies will only conduct bias audits that reveal issues related to race, ethnicity, and binary gender in an effort to comply with minimum requirements. [We are] also interested to see how this [law] will be amended or modified by cases where courts make a determination that the automated employment decision tool did have a disparate impact on protected classes of individuals based on race, binary gender, or ethnicity, but the bias audits conducted during the normal course of business did not detect any such impact".

As such, it will take some time to see how companies approach their compliance obligations, as well as how the law will be enforced once it enters into effect.

Marcello Ferraresi Privacy Analyst

Comments provided by:

Jessica Lee Partner and Co-Chair of Privacy, Security, and Data Innovations

Bianca Lewis Associate

Loeb & Loeb LLP, New York