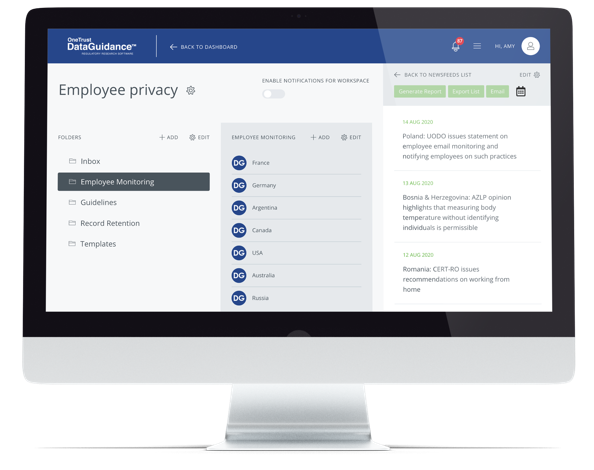

Continue reading on DataGuidance with:

Free Member

Limited ArticlesCreate an account to continue accessing select articles, resources, and guidance notes.

Already have an account? Log in

USA: Regulating the use of AI in employment decisions

New tools for employers to increase productivity and efficiency continue to evolve as artificial intelligence (AI) and automated decision-making become more sophisticated and prevalent. These tools are particularly common in the hiring arena, where employers can use technology to screen, track, and even communicate with applicants. Large companies that receive hundreds or thousands of applications per week can save considerable time by deploying a tool that, for example, scores each applicant based on how closely they match a job description, or extracts and summarizes relevant information from applications and hiring materials.

Legislators are now beginning to regulate the use of such tools in the employment context. In the absence of federal regulation, the US appears likely to end up with a patchwork of regulations passed at the local and state level, similar to the current privacy regulation landscape. Laura Schwalbe, from Aurelian Law PLLC, evaluates the current regulation of AI in the employment context and how this may evolve.

The current regulatory landscape

California

Much like in the privacy space, California has led the way: the California Consumer Privacy Act (CCPA) directed the California Privacy Protection Agency (CPPA) to issue regulations on the use of automated decision-making technology. The first draft of these regulations, released on November 27, 2023, would apply broadly to consumers (not just in the employment context) and would give them the right to notification and the right to opt out of certain uses of automated decision-making technology, which is defined as 'any system, software, or process - including one derived from machine learning, statistics, or other data-processing or artificial intelligence - that processes personal information and uses computation as whole or part of a system to make or execute a decision or facilitate human decision-making. Automated decision-making technology includes profiling.' 'Profiling,' in turn, includes the automated processing of personal information to evaluate aspects of a natural person, including their performance at work.

The draft regulations would require businesses to give consumers notice of their use of automated decision-making technology, including a plain-language explanation of its purpose, a description of the right to opt out, a description of the consumer's right to access additional information about how the technology is used with respect to them, and a means by which the consumer can obtain that more detailed information. Such information may include the logic used, the intended output, how the business plans to use the output, and whether the technology has been evaluated for validity, reliability, and fairness.

On opt-out rights, the draft regulations include several provisions that would apply in the employment context. For example, businesses would be required to let consumers opt out of the use of automated decision-making technology for decisions that produce legal or similarly significant effects, and for profiling a consumer acting as an employee, independent contractor, or job applicant. Additionally, if a business uses automated decision-making to make a decision that, for example, denies a consumer an employment opportunity, the business would be required to notify the consumer that the decision was made using automated decision-making, that the consumer can exercise an access right to learn more about it, and that the consumer can file a complaint with the CPPA and the Attorney General if they believe their privacy rights have been violated.

The CPPA Board discussed these draft regulations at a meeting in December 2023 and ultimately sent them back to a subcommittee for further revision. California's rulemaking process in this area is a lengthy one, so these rules are unlikely to be finalized for some time. Nonetheless, employers subject to the CCPA are on notice of the types of notice and opt-out requirements likely to apply. Some other states with broad privacy laws, such as Colorado, Virginia, and Connecticut, take a similar approach, giving consumers the ability to opt out of profiling connected to decisions that produce legal or similarly significant effects, which include decisions about employment opportunities.

New York City

One jurisdiction that has passed legislation specifically governing the use of AI in employment decisions is New York City. Local Law 144 of 2021 (NYC 144), enforced since July 2023, covers the use of automated employment decision tools (AEDTs) to make employment decisions. An AEDT is defined as any computational process derived from machine learning, statistical modeling, data analytics, or AI that issues a simplified output (such as a score, classification, or recommendation) used to substantially assist or replace discretionary (i.e., human) decision-making.

'Employment decisions' are limited in scope under NYC 144: the law applies only to decisions to hire candidates or promote employees. It would not apply, for example, to decisions on compensation or termination. Employers that use AEDTs for covered employment decisions must notify applicants and employees before using the tool, and the notice must give individuals the ability to request an alternative selection process.

NYC 144 requires employers to engage an independent and impartial auditor to conduct a bias audit no more than one year before using an AEDT, and to repeat the audit annually. Once the audit is complete, employers must publicly post certain details, including the AEDT's distribution date, the audit date, and a summary of the bias audit results. Some companies have begun issuing notices in line with NYC 144. These notices typically explain that the company uses an AEDT to process employment applications, easing the burden on recruiters and hiring managers by recommending or highlighting candidates for consideration, and they link to the independent auditor's report.
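The article does not describe how a bias audit is calculated. As a minimal sketch, assuming the selection-rate method set out in the NYC Department of Consumer and Worker Protection's implementing rules for tools that select or reject candidates, an auditor computes each category's selection rate and divides it by the selection rate of the most-selected category to obtain an impact ratio. The class, function, category names, and counts below are hypothetical, and the sketch omits the rules' separate scoring-rate method for tools that output scores, intersectional categories, and every other audit requirement.

```python
from dataclasses import dataclass


@dataclass
class CategoryOutcome:
    """Applicant counts for one demographic category (hypothetical data)."""
    name: str
    applicants: int
    selected: int

    @property
    def selection_rate(self) -> float:
        # Share of this category's applicants whom the tool advanced.
        return self.selected / self.applicants


def impact_ratios(outcomes: list[CategoryOutcome]) -> dict[str, float]:
    """Divide each category's selection rate by the highest selection rate.

    Assumes every category has at least one applicant; a category with
    zero applicants would raise ZeroDivisionError.
    """
    top_rate = max(o.selection_rate for o in outcomes)
    return {o.name: o.selection_rate / top_rate for o in outcomes}


if __name__ == "__main__":
    # Illustrative numbers only, not drawn from any real audit.
    data = [
        CategoryOutcome("Category A", applicants=200, selected=60),
        CategoryOutcome("Category B", applicants=150, selected=30),
        CategoryOutcome("Category C", applicants=50, selected=12),
    ]
    for name, ratio in impact_ratios(data).items():
        print(f"{name}: impact ratio {ratio:.2f}")
```

With these figures the script prints impact ratios of 1.00, 0.67, and 0.80 for Categories A, B, and C respectively.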

Violations of NYC 144 are punishable by civil penalties of up to $500 for a first violation and between $500 and $1,500 for each subsequent violation. There is no private right of action under NYC 144, so aggrieved employees or applicants cannot directly sue an employer for perceived violations.
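To show how penalties can accumulate, the following sketch computes an upper bound on exposure. It assumes, as the law's text provides, that the $500 cap applies to the first violation and to any additional violations on the same day, and that the $1,500 cap applies to each later violation. How violations are counted in practice is a legal question this simplified helper does not resolve; the function and constant names are illustrative only.

```python
# Illustrative caps; the counting of violations is a simplifying assumption.
FIRST_DAY_CAP = 500      # per violation on the day of the first violation
SUBSEQUENT_CAP = 1_500   # per violation after that day


def max_penalty(first_day_violations: int, subsequent_violations: int) -> int:
    """Return the maximum civil penalty, in dollars, for the given counts."""
    if first_day_violations < 0 or subsequent_violations < 0:
        raise ValueError("violation counts must be non-negative")
    return (FIRST_DAY_CAP * first_day_violations
            + SUBSEQUENT_CAP * subsequent_violations)


if __name__ == "__main__":
    # One violation on the first day, followed by nine later violations.
    print(max_penalty(1, 9))
```

For one violation on the first day followed by nine later violations, `max_penalty(1, 9)` returns 14000, i.e., a ceiling of $14,000.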

Although much narrower in scope than the CCPA and similar state privacy laws that cover automated decision-making, NYC 144 overlaps with them in a few key areas: both provide for notice to potentially affected individuals and for some form of opt-out or election of an alternative process.

Federal-level initiatives

On the federal side, the U.S. Equal Employment Opportunity Commission (EEOC) has made its views on the use of AI in hiring clear. In 2021, it launched an initiative designed to 'ensure that the use of software, including artificial intelligence (AI), machine learning, and other emerging technologies used in hiring and other employment decisions comply with the federal civil rights laws that the EEOC enforces.' In May 2023, the EEOC issued a technical document, 'Assessing Adverse Impact in Software, Algorithms, and Artificial Intelligence Used in Employment Selection Procedures Under Title VII of the Civil Rights Act of 1964,' intended to help prevent discrimination against job seekers and workers.
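The EEOC's technical document discusses the 'four-fifths rule,' a long-standing rule of thumb under which a selection rate for one group that is less than four-fifths (80%) of the rate for the group with the highest selection rate may indicate adverse impact; the EEOC cautions that it is only a rule of thumb and is not appropriate in every circumstance. A minimal Python sketch of the arithmetic follows. The function names and applicant figures are hypothetical, and exact fractions are used so that a ratio of exactly 0.8 is not misclassified by floating-point rounding.

```python
from fractions import Fraction

FOUR_FIFTHS = Fraction(4, 5)


def selection_rate(selected: int, applicants: int) -> Fraction:
    """Proportion of a group's applicants who were selected, kept exact."""
    if applicants <= 0:
        raise ValueError("applicants must be positive")
    return Fraction(selected, applicants)


def four_fifths_flag(group_rate: Fraction,
                     highest_rate: Fraction) -> tuple[Fraction, bool]:
    """Compare a group's rate with the highest group's rate.

    Returns the ratio and whether it falls below four-fifths, which the
    rule of thumb treats as a possible sign of adverse impact.
    """
    ratio = group_rate / highest_rate
    return ratio, ratio < FOUR_FIFTHS


if __name__ == "__main__":
    # Hypothetical figures: 48 of 80 applicants in one group advance,
    # and 12 of 40 in another.
    rate_a = selection_rate(48, 80)  # 3/5
    rate_b = selection_rate(12, 40)  # 3/10
    ratio, flagged = four_fifths_flag(rate_b, max(rate_a, rate_b))
    print(f"ratio = {float(ratio):.2f}, below four-fifths: {flagged}")
```

With these figures the script prints `ratio = 0.50, below four-fifths: True`. A flag is a prompt for closer review rather than a legal conclusion.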

Also at the federal level, the White House's October 2023 Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence addresses employers' responsibilities when using AI:

- 'The critical next steps in AI development should be built on the views of workers, labor unions, educators, and employers to support responsible uses of AI that improve workers' lives, positively augment human work, and help all people safely enjoy the gains and opportunities from technological innovation.'

- The Order also mandates that the Secretary of Labor 'develop and publish principles and best practices for employers that could be used to mitigate AI's potential harms to employees' well-being and maximize its potential benefits. The principles and best practices shall include specific steps for employers to take with regard to AI.'

Further regulation

Employers can also expect other jurisdictions to pass similar legislation, whether as standalone regulation like NYC 144 or as part of a broader privacy regulation like the CCPA. For example, a bill introduced in the New York State Assembly on February 28, 2024, is similar in scope to NYC 144 and would apply to AEDTs used to filter employment candidates or prospective candidates for hire without relying on assessments by individuals. The bill would require annual disparate impact analyses of any AEDT. Unlike under NYC 144, however, these analyses would not be publicly filed and could be 'subject to all applicable privileges,' such as attorney-client privilege if conducted by an attorney. Employers would still be required to make publicly available a summary of the most recent disparate impact analysis and the AEDT's distribution date, and to provide that summary to the New York Department of Labor.

New Jersey also has two bills, introduced in February 2024, that would regulate AI. Assembly Bill 3854 would regulate the 'use of automated employment decision tools in hiring decisions.' This bill is similar in scope to NYC 144, although it would also apply to companies that sell or offer for sale AEDTs, not just to employers that use them. Assembly Bill 3911, by contrast, would apply in the narrow situation where an employer conducts a video interview during the hiring process and uses AI to analyze the video. It would require notice to the applicant before the interview, an explanation to the applicant of how the AI works, and the applicant's written consent to the use of such AI.

Employers can expect regulation in this area to expand rapidly, whether in the form of comprehensive federal legislation or, more likely, piecemeal regulation of the kind already emerging. Overall, even employers not currently subject to regulation should exercise caution when selecting and using any form of AI or AEDT in connection with employees or prospective employees.

Laura K. Schwalbe Partner


Aurelian Law PLLC, New York